NVENC & Framebuffer Sizing

Coming from K2-based servers, we had neither GPU monitoring capabilities nor NVENC in our existing VDI servers. The new monitoring features available on Maxwell and Pascal series GPUs have exposed some interesting sizing considerations when using NVENC.

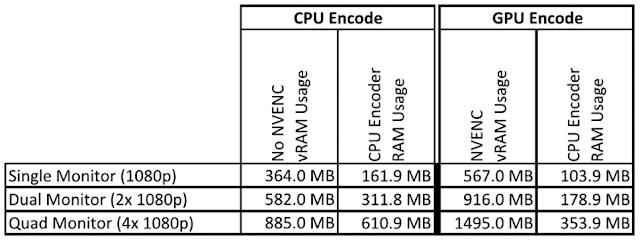

It turns out (and this makes total sense) that the encode process uses additional video RAM beyond what the session used without NVENC. Using RDanalyzer, I looked at the encoder memory usage for single-, dual-, and quad-monitor systems using both CPU and GPU encode. See the table below:

Takeaways:

- The increase in vRAM/FB usage with NVENC is approximately equal to the CPU encoder's RAM usage without NVENC. That rule of thumb should be good enough for sizing.

- There is a savings in CPU encoder RAM when using NVENC, but the CPU encoder still uses some RAM (I assume for redirection, sound, printers, etc.), especially with more monitors.

- Not listed, but in all of these scenarios the CPU usage for encode drops from a full core at times to 0-2%.
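The first takeaway can be turned into a quick back-of-the-envelope check. The sketch below is only an illustration of that rule of thumb, not a sizing tool: the function names, the 10% headroom, the list of profile sizes, and the numbers in the usage example are all assumptions of mine, so substitute your own measurements and the profiles your GPU actually offers.

```python
from typing import Optional, Sequence

# Hypothetical vGPU framebuffer profile sizes in MB (assumption, not from the post).
PROFILE_SIZES_MB = (512, 1024, 2048, 4096, 8192)


def estimate_fb_with_nvenc(baseline_fb_mb: float,
                           cpu_encoder_ram_mb: float,
                           headroom: float = 0.10) -> float:
    """Estimate framebuffer use once NVENC is enabled.

    Rule of thumb from the takeaways: the FB increase with NVENC is roughly
    the RAM the CPU encoder used without NVENC. The headroom factor is an
    extra safety margin and is an assumption, not a measured value.
    """
    if baseline_fb_mb < 0 or cpu_encoder_ram_mb < 0 or headroom < 0:
        raise ValueError("inputs must be non-negative")
    return (baseline_fb_mb + cpu_encoder_ram_mb) * (1.0 + headroom)


def smallest_profile(required_mb: float,
                     profiles: Sequence[int] = PROFILE_SIZES_MB) -> Optional[int]:
    """Return the smallest profile that fits required_mb, or None if none does."""
    for size in sorted(profiles):
        if size >= required_mb:
            return size
    return None


if __name__ == "__main__":
    # Placeholder measurements in MB; replace with values from your own monitoring.
    needed = estimate_fb_with_nvenc(baseline_fb_mb=700, cpu_encoder_ram_mb=200)
    print(f"Estimated FB with NVENC: {needed:.0f} MB")
    print(f"Smallest fitting profile: {smallest_profile(needed)} MB")
```

Measure the baseline FB and CPU encoder RAM separately for each monitor count, since both grow with the number of displays.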

As a shameless plug, this is one of the things I will be presenting as part of our vGPU and VDI story at GTC 2018 in San Jose. Use code GMSGTC18 to save 20% off registration. nvda.ws/2iC4Bry

Interesting read. This is one of the reasons why you can't enable NVENC on the lower-end vGPU models and need at least 1 GB of vGPU RAM.
