Issues after forcibly shutting down a vGPU-enabled machine - Reset GPU on XenServer

Had an odd scenario today where a vGPU-enabled VM on XenServer became unresponsive and would not restart, shut down, force shut down, reset, or really do anything. I had to dig up a handful of Citrix articles to remember how to force it off, but then ran into another issue: killing the VM didn't clean up the GPU side... So here we go!

First we have to kill the VM on XenServer: (https://support.citrix.com/article/CTX220777 & https://support.citrix.com/article/CTX215974)

- Disable High Availability (HA) first so it doesn't try to restart the VM while you are working on it

- Log into the XenServer host that is running the problem VM, via SSH or the console in XenCenter

- Run the following command to list VMs and their UUIDs

xe vm-list resident-on=<uuid_of_host>

- First you can try just the normal shutdown command with force

xe vm-shutdown uuid=<UUID from step 3> force=true

- If that just hangs, press Ctrl+C to cancel it and try resetting the power state instead. force=true is required on this command

xe vm-reset-powerstate uuid=<UUID from step 3> force=true

- If the VM is still not shut down, we may need to destroy the domain

- Run this command to get the domain ID of the VM. It is the number in the first column of the output. The list shows the domains on the host: domain 0 (Dom0) is the host itself, and every number after that is a running VM

list_domains

- Now run this command using the domain ID from the output of step 7

xl destroy <Domain>
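If you'd rather not eyeball the list_domains output, the lookup can be scripted. Here's a minimal sketch, assuming the output has a header row followed by pipe-separated `id | uuid | state` columns (that layout is my assumption, so check it against your own host first). `domid_for_uuid` is a made-up helper name, not something that ships with XenServer.

```shell
#!/bin/sh
# Hypothetical helper: read list_domains-style output on stdin and print the
# domain ID whose uuid column matches the UUID given as the first argument.
# Assumes a header line, then rows shaped like "id | uuid | state".
domid_for_uuid() {
    awk -F'|' -v want="$1" '
        NR > 1 {
            id = $1; uuid = $2
            gsub(/[ \t]/, "", id)     # strip padding around the ID
            gsub(/[ \t]/, "", uuid)   # strip padding around the UUID
            if (uuid == want) print id
        }'
}
```

On the host you would pipe the real output into it, along the lines of `list_domains | domid_for_uuid <UUID from step 3>`, and hand the number it prints to xl destroy.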

Now at this point this normally solves it... BUT I couldn't start anything on the vacated pGPU... SO we continue...

- Check the GPU using NVIDIA's built-in tools by running

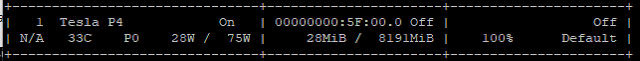

nvidia-smi

This showed the GPU at 100% utilization with no processes running on it...

- At this point we wanted to reset the GPU by using

nvidia-smi --gpu-reset -i * [replace * with number of the GPU in question from nvidia-smi]

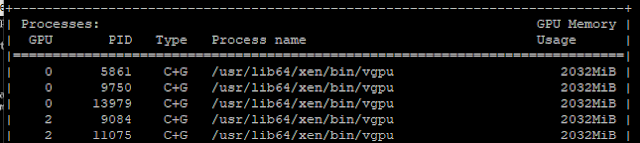

This did not work, as it said the GPU was in use by one or more processes...

- Run this command to see processes not listed in nvidia-smi

fuser -v /dev/nvidia*

- Normally we could try to kill any processes listed here by using

kill -9 PID

- We only had a service listed (xcp-rrdd-gpumon). It would respawn if we killed it, so we needed to stop it using

service xcp-rrdd-gpumon stop

- Then we checked processes again using the same fuser command as before

fuser -v /dev/nvidia*

- Now there were no processes running and we could successfully reset the GPU

nvidia-smi --gpu-reset -i *

- Check nvidia-smi to ensure this worked and then restart the service we stopped

service xcp-rrdd-gpumon start
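The stop, reset, restart sequence can be wrapped in a small function so the monitoring service always gets started again, even if the reset fails. This is just a sketch: `reset_gpu`, `run`, and the `DRY_RUN` switch are names I made up for illustration, and it assumes the only thing holding the GPU is xcp-rrdd-gpumon, as it was in our case.

```shell
#!/bin/sh
# Hypothetical wrapper around the manual steps: stop the GPU metrics service,
# reset one GPU, then start the service again.

# run: execute a command, or only print it when DRY_RUN=1 (illustrative switch).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# reset_gpu <index>: index is the GPU number shown by nvidia-smi.
reset_gpu() {
    gpu_index=$1
    # Give up early if the service cannot be stopped.
    run service xcp-rrdd-gpumon stop || return 1
    run nvidia-smi --gpu-reset -i "$gpu_index"
    status=$?
    # Restart the monitor whether or not the reset succeeded.
    run service xcp-rrdd-gpumon start
    return "$status"
}
```

Setting `DRY_RUN=1` and calling `reset_gpu 0` only prints the three commands, which is a cheap sanity check before running it for real against GPU 0.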

Also learned there are so many other cool features packed into nvidia-smi I hadn't noticed before when searching for solutions here. Maybe a learning journey for another day!
